Like Excel spreadsheets, data engineers will probably be ingesting CSV files until the heat death of the universe. It’s Death, Taxes, and problematic data formats. While not limited to CSV files, one problem that I keep encountering is the presence of control characters in CSV files. Picture this, you have an application that captures comments or other user provided text. The user hits the tab key, maybe the return key, while filling out a field and the application stores it as-is. The comment is then written to a CSV file during an extract/export step.

| Tip, sanitize all of your inputs |

CSV files support embedded control characters in strings – the string is typically double-quoted with the control characters embedded in the string. If you open the file in a text editor that doesn’t attempt any conversion then you may see single records broken up over multiple lines as the editor sees the control characters as end of line markers.

| Tip, do not use Excel or any other tool that ‘interprets’ the file when viewing prior to ingestion. It’ll obscure many issues that need to be addressed in your ETL. Excel will, at first glance, parse the following example correctly |

All parsers are not made equal. Some will correctly parse the control characters, some will garble the output, and crucially, some will garble the output without raising any warnings or errors! This last part is key, this is one way your data lake becomes a data swamp. So how do you know if your parser is up to the task?

Step One – Create a nightmare file!

The following creates a CSV file with a variety of data types. It has a text string with control characters in the middle of the record. This is important as parsing errors will impact the subsequent columns and records in the output.

import csv

import random

import string

from datetime import datetime, timedelta

def create_csv_with_random_data(filename, num_rows=20):

"""

Creates a CSV file with text and numeric columns, including a comment

field with a randomized presence of control characters (carriage returns,

line feeds, tabs), and additional columns for date, boolean, and category.

Args:

filename (str): The name of the CSV file to create.

num_rows (int): The number of rows to generate in the CSV file.

"""

with open(filename, 'w', newline='', encoding='utf-8') as csvfile:

fieldnames = ['id', 'name', 'value', 'comment', 'date', 'is_active', 'category']

writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

writer.writeheader()

for i in range(num_rows):

# Generate random data

row_id = i + 1

name = ''.join(random.choices(string.ascii_lowercase, k=8))

value = random.randint(1, 100)

# Create a comment with randomized control characters

comment = generate_comment_with_random_control_chars(row_id)

# Generate additional random data for new columns

date = generate_random_date()

is_active = random.choice([True, False])

category = random.choice(['A', 'B', 'C', 'D'])

writer.writerow({

'id': row_id,

'name': name,

'value': value,

'comment': comment,

'date': date.strftime('%Y-%m-%d'), # Format date as YYYY-MM-DD

'is_active': is_active,

'category': category

})

def generate_comment_with_random_control_chars(row_id):

"""

Generates a comment string with a randomized presence of control characters.

Args:

row_id (int): The ID of the row for which the comment is being generated.

Returns:

str: The comment string with randomized control characters.

"""

comment_parts = [f"This is a comment for row {row_id}."]

control_chars = ["\n", "\r", "\t", "\r\n", "\0"]

control_char_descriptions = {

"\n": "It has a line feed.",

"\r": "It also has a carriage return.",

"\t": "And a tab character.",

"\r\n": "Windows CR - LF line ending",

"\0": "Null character"

}

# Randomly add 0-3 control characters

for _ in range(random.randint(0, 3)):

char = random.choice(control_chars)

comment_parts.append(control_char_descriptions[char])

comment_parts.append(char)

comment_parts.extend(["Here is another line.", "\n", "And a final line."])

return "".join(comment_parts)

def generate_random_date():

"""

Generates a random date within the last year.

Returns:

datetime: A random datetime object.

"""

today = datetime.today()

one_year_ago = today - timedelta(days=365)

random_days = random.randint(0, 365)

random_date = one_year_ago + timedelta(days=random_days)

return random_date

if __name__ == "__main__":

create_csv_with_random_data("csv_nightmare.csv")

print("CSV file 'csv_nightmare.csv' created successfully.")Step Two – Test some common methods for loading data

Let’s load this file with a variety of parsers. I’ve chosen Pandas, the python CSV library, the python split() command, and two variations in Spark.

import csv

import pandas as pd

from pyspark.sql import SparkSession

from pyspark.sql.functions import col

# Can pandas parse the file correctly?

print("Pandas parser")

df = pd.read_csv("csv_nightmare.csv")

print(df)

# How about python csv library?

print("\nCSV library")

with open("csv_nightmare.csv", 'r', newline='', encoding='utf-8') as csvfile:

reader = csv.reader(csvfile)

for row in reader:

print(row)

# How about a simple split, no library?

print("\nSimple split")

with open("csv_nightmare.csv", 'r', encoding='utf-8') as file:

for line in file:

# Remove leading/trailing whitespace and split by commas

row = [cell.strip() for cell in line.strip().split(',')]

print(row)

# How about Apache Spark?

spark = SparkSession.builder.appName("CSVLoader").getOrCreate()

# Load the CSV file into a DataFrame

print("\nSpark default")

df = spark.read.csv("csv_nightmare.csv", header=True, inferSchema=True)

df.show(truncate=False)

# Load the CSV file into a DataFrame with the multiLine option

print("\nSpark with multiLine option")

df = spark.read.option("multiLine", "true").csv("csv_nightmare.csv", header=True, inferSchema=True)

df.show(truncate=False)

# Stop the SparkSession

spark.stop()So which ones worked?

| Pandas | Correctly parsed |

| CSV Library | Correctly parsed |

| Python split() | Nope! |

| Spark default | Nope! |

| Spark with options | Correctly parsed |

Let’s dig in on the two that did not parse the file correctly.

Python split() -> row = [cell.strip() for cell in line.strip().split(‘,’)]

['id', 'name', 'value', 'comment', 'date', 'is_active', 'category']

['1', 'pbuiesar', '97', '"This is a comment for row 1.Here is another line.']

['And a final line."', '2024-05-08', 'True', 'C']

['2', 'bqgnrkcf', '99', '"This is a comment for row 2.It has a line feed.']

['Windows CR - LF line ending']

['It has a line feed.']

['Here is another line.']

['And a final line."', '2024-08-03', 'False', 'A']I’ve selected the output from the first three records that were parsed. We have the header row that wasn’t skipped. That’s fine for this example as it shows us the expected list of fields. Looking at record id=1, we have two rows. Record id=2 is much worse with five rows output. This is three records in, eight records out. The data is completely garbled with the field order being all wrong.

| Tip, this is why we don’t write our own parser unless we really, really, really need to do so. I’ve seen multiple teams make this exact mistake by missing edge cases |

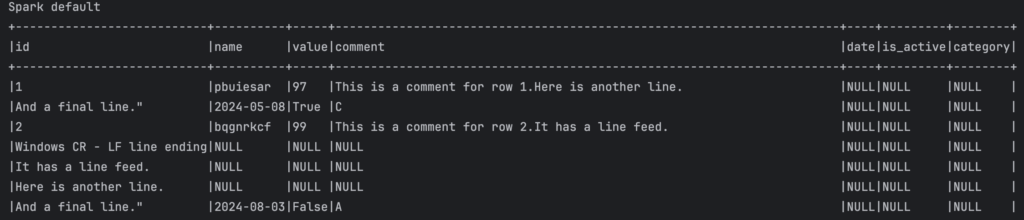

Spark with typical settings -> df = spark.read.csv(“csv_nightmare.csv”, header=True, inferSchema=True)

Using a screenshot to maintain the table format, we can see that the data spills over into multiple records with the date, boolean, and category data appearing in the wrong columns. No error is reported even though this is a garbage parse.

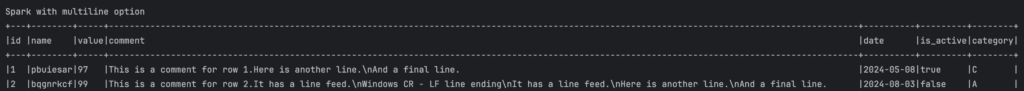

Spark with multiLine -> df = spark.read.option(“multiLine”, “true”).csv(“csv_nightmare.csv”, header=True, inferSchema=True)

With the multiLine option (I’m running spark 3.5.5, this syntax has changed over time) we get a good parse!

Conclusion

The example used here is obvious, but consider what happens if a small fraction of records have control characters. You’ll get weird data where columns will be unexpectedly null or strings contain odd text interspersed in your data. This can be hard to track down after the fact. So test your parsers and write test cases with gnarly embedded text in them to harden the entry points into your data solution.

Final thought, I didn’t include Unicode in this example but it’s another consideration. Can you parse and load an email address with umlauts or other diacritical marks? What about trade mark or other symbols in your text?